In today’s digital world, building a scalable architecture is a crucial requirement for modern software systems. Whether you are running a small startup or managing an enterprise-grade platform, the ability to scale efficiently ensures that your system can handle fluctuating demand while maintaining high availability and performance.

Before diving into the technical aspects of horizontal scaling, it’s essential to understand the core principles of scalability that guide us in building a scalable system. Two key principles form the foundation of scalable architecture: Decentralization and Independence. These principles help us design systems that are not only scalable but also resilient and fault-tolerant.

The Principle of Decentralization

Decentralization is the first and most critical principle of building a scalable system. It means breaking down a monolithic system into smaller, specialized components that can work independently to perform specific tasks.

In a monolithic architecture, a single component or service is responsible for handling all types of tasks—processing requests, managing data, and handling business logic. While this design may be easier to build initially, it quickly becomes a bottleneck when the system needs to scale.

Why Monolithic Architecture is an Anti-Pattern for Scalability

A monolithic system can only scale vertically, meaning you need to upgrade the hardware—more powerful CPUs, more memory, and faster storage—to handle increasing load. This approach has significant limitations:

- Cost Inefficiency: Upgrading hardware is expensive and offers diminishing returns.

- Limited Scalability: Even the most powerful hardware has physical limits.

- Single Point of Failure: If the monolithic component fails, the entire system goes down.

For true horizontal scalability, you need to adopt a decentralized architecture. In a decentralized system, different tasks are distributed among multiple specialized components or microservices. Each service is responsible for a specific function and can scale independently of others.

Example of Decentralization

Consider an e-commerce application. In a monolithic design, a single service would handle user authentication, product search, payment processing, and order fulfillment. In a decentralized system, each of these functions would be managed by a separate microservice:

- Authentication Service for handling user login and security.

- Product Catalog Service for managing product search and listings.

- Payment Service for processing payments.

- Order Service for managing orders and fulfillment.

This separation allows each service to scale independently based on demand. For instance, during a sale event, the Product Catalog Service may need to scale more aggressively than the Authentication Service.

The Principle of Independence

While decentralization provides the foundation for horizontal scalability, it is only effective if the individual components can operate independently without constant coordination. Independence reduces the chances of creating new bottlenecks as the system scales.

Why Independence Matters for Scalable Architecture

Imagine a system with multiple worker components responsible for processing tasks. If these workers rely on a centralized coordinator for task assignment or shared data access, the coordinator can quickly become a bottleneck. As the number of workers increases, the load on the coordinator grows proportionally. Eventually, the coordinator will become overwhelmed, limiting the scalability of the entire system.

The solution? Minimize coordination and shared resources to allow workers to function as autonomously as possible.

How to Achieve Independence

- Avoid Shared State: Reduce or eliminate shared state between components. For example, instead of multiple services writing to a single database, consider using independent data stores for each service.

- Event-Driven Architecture: Use event-driven communication patterns, where services exchange information through events rather than direct calls. This decouples services and minimizes the need for coordination.

- Stateless Workers: Design your worker components to be stateless, meaning they do not rely on internal state to function. Stateless workers can be replicated easily, improving scalability and fault tolerance.

- Optimistic Concurrency: In scenarios where shared resources are unavoidable, use optimistic concurrency techniques to reduce conflicts and contention.

Applying Decentralization and Independence Together

In building scalable systems, applying decentralization and independence together is not just a best practice—it’s essential for creating scalable, highly available, and fault-tolerant architectures. While each principle offers individual benefits, the real power of scalability emerges when both are combined.

When we decentralize our architecture, we distribute responsibilities among multiple services or components. However, decentralization alone is not enough. If these components constantly need to coordinate with one another or rely on shared resources, they can introduce new bottlenecks, limiting scalability. This is where independence becomes critical. Independence allows these components to work autonomously, ensuring that no single failure or bottleneck can bring down the entire system.

Let’s explore how decentralization and independence complement each other and how they can be applied in real-world scenarios.

Breaking Down Monolithic Applications

In traditional monolithic applications, a single service handles all functions—authentication, payment processing, product management, and more. This centralized approach tightly couples every feature and process, making the system rigid and difficult to scale.

When you apply decentralization, you break this monolith into multiple microservices, each responsible for a distinct function. However, decentralizing the application is just the first step. If these microservices are still dependent on a shared database or a central message broker that handles all communication, your system will still suffer from scalability issues.

Independence removes this reliance. Instead of using a single shared database, you can give each service its own dedicated data store. Similarly, you can adopt asynchronous communication patterns (such as event-driven messaging) to reduce direct dependencies between services. This ensures that each service can scale, fail, and recover without impacting others.

Event-Driven Architecture: A Practical Example

One of the best ways to achieve decentralization and independence is through event-driven architecture. In this model, services communicate by publishing and subscribing to events rather than making direct API calls.

For instance, in an e-commerce platform:

- The Order Service publishes an event when a new order is placed.

- The Inventory Service subscribes to this event and updates stock levels.

- The Notification Service listens for the same event and sends a confirmation email to the customer.

In this setup, each service operates independently. If the Notification Service goes down, it won’t affect order processing or inventory updates. Once it recovers, it can process the missed events without any manual intervention. This independence makes the system far more resilient and scalable.

Avoiding Shared State

Another critical aspect of applying decentralization and independence is avoiding shared state. Shared resources—like a common database, a cache, or a file system—can create a coordination bottleneck.

For example, if multiple services need to update the same database table, they must coordinate to prevent conflicts. This coordination reduces scalability, as the database becomes a single point of contention.

To avoid this:

- Use separate data stores for each service to minimize contention.

- Adopt eventual consistency instead of strong consistency for distributed systems. This means services can update their data independently and resolve inconsistencies over time, rather than blocking on synchronous updates.

- Implement CQRS (Command Query Responsibility Segregation) to separate read and write models, reducing contention on shared resources.

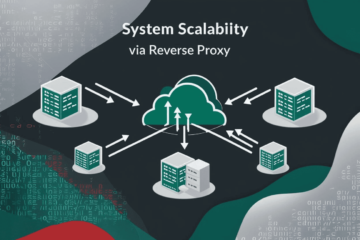

Decentralized Load Balancing and Service Discovery

Decentralization and independence are essential even at the infrastructure level. In horizontally scaled systems, you must ensure that traffic is distributed evenly across multiple instances. This is where load balancers and service discovery come into play.

- Load balancers act as reverse proxies, distributing client requests across multiple service instances. They help reduce the risk of overwhelming any single instance, ensuring consistent performance and availability.

- Service discovery enables services to locate each other dynamically. Instead of hardcoding the addresses of service instances, service discovery allows new instances to register themselves and be discovered automatically. This independence from static configurations makes the system more scalable and resilient.

For example, in a Kubernetes cluster, services are automatically registered, and internal DNS provides the service discovery mechanism. Combined with load balancing, this ensures smooth scaling and failover.

Fault Isolation and Resilience

A highly decentralized and independent architecture also improves fault isolation. In a monolithic system, a single failure—such as a slow database query—can bring down the entire application. In contrast, a decentralized, independent system can isolate failures and prevent them from cascading.

For example:

- If the Payment Service experiences high latency, other services like the Product Catalog or Order Fulfillment can continue to function.

- You can implement circuit breakers to detect failing services and prevent them from being overwhelmed by new requests.

- Retry and backoff mechanisms allow services to recover gracefully without creating excessive load.

Scaling Different Components Independently

The beauty of combining decentralization and independence is the ability to scale different components independently based on demand. In a typical application, not all services will experience the same load. For instance:

- The Authentication Service may only see high traffic during login spikes.

- The Product Catalog Service may need to scale more aggressively during a sale event.

- The Order Processing Service may require high scalability only during checkout peaks.

By applying these principles, you can allocate resources where they’re needed most, optimizing cost and performance.

Monitoring and Observability

Finally, decentralization and independence also improve monitoring and observability. With a monolithic application, identifying the root cause of a performance issue can be challenging. In a decentralized system, you can monitor each component separately, gaining better insights into system health and performance.

Tools like Prometheus, Grafana, and ELK Stack (Elasticsearch, Logstash, Kibana) allow you to monitor metrics, logs, and traces for each service independently, making it easier to detect and resolve issues.

Horizontal Scaling vs. Vertical Scaling

Before we move on, it’s important to distinguish between the two primary scaling strategies:

- Vertical Scaling: Also known as scaling up, vertical scaling involves upgrading the hardware (CPU, memory, storage) of a single instance to handle more load. While this approach is simple to implement, it has limitations and is not suitable for large-scale systems.

- Horizontal Scaling: Also known as scaling out, horizontal scaling involves adding more instances of a service to handle increased demand. This approach is more complex to implement but offers virtually unlimited scalability.

Horizontal scaling aligns perfectly with the principles of decentralization and independence, making it the preferred approach for modern cloud-native architectures.

Conclusion

In summary, the key to building a scalable architecture lies in following the principles of decentralization and independence. Decentralization allows you to break down your system into smaller, specialized components, while independence ensures that these components can operate without creating bottlenecks. Together, these principles form the foundation of horizontal scaling and microservices-based architecture.

In future posts, we’ll dive deeper into how to implement these principles using practical tools and patterns like service discovery, event-driven architecture, load balancers, and container orchestration platforms such as Kubernetes.

0 Comments