Concurrency in modern systems is both a blessing and a curse. On one hand, it unlocks the potential of multi-core CPUs and parallel processing. On the other hand, it introduces challenges like lock contention—a silent performance killer. In this article, we’ll explore some practical strategies for minimizing lock contention and improving system performance, based on lessons learned in the trenches.

Let’s break this down step-by-step.

Reduce Lock Duration

The simplest way to minimize contention is to reduce the time a lock is held. This sounds obvious, but I’ve seen countless systems that hold locks far longer than necessary because of poor design choices.

How to Reduce Lock Duration:

- Move Non-Essential Code Outside the Critical Section:

Keep the code inside the lock as minimal as possible. Any operation that doesn’t need to touch the shared state, such as logging, external calls, or complex computations on data the thread already owns, should be moved out.

Example:

In a legacy system I worked on, the logging call sat inside the critical section, so every thread kept holding the lock while the log line was written to disk. That is a classic mistake. Moving the logging call outside the lock reduced contention significantly.

- Avoid Blocking Operations Inside Locks:

Operations like I/O, database queries, or network calls inside a locked block can lead to threads waiting unnecessarily, especially if the external operation is slow. This creates a ripple effect, increasing contention and reducing throughput.

Always ask yourself: “Does this code really need to be inside the lock?”
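To make the first point concrete, here is a minimal Java sketch contrasting a critical section that formats and logs while holding the lock with one that keeps only the shared-state mutation inside it. The `OrderLog` class and its methods are hypothetical, not taken from the legacy system described above:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.logging.Logger;

// Hypothetical example: a shared, in-memory record of processed orders.
class OrderLog {
    private static final Logger LOG = Logger.getLogger(OrderLog.class.getName());
    private final Object lock = new Object();
    private final List<String> entries = new ArrayList<>();

    // Before: formatting and logging happen while the lock is held,
    // so every other caller waits for work that touches no shared state.
    void recordSlow(String orderId, double amount) {
        synchronized (lock) {
            String line = String.format("order=%s amount=%.2f", orderId, amount);
            entries.add(line);
            LOG.info(line);
        }
    }

    // After: only the mutation of shared state stays inside the critical section.
    void recordFast(String orderId, double amount) {
        String line = String.format("order=%s amount=%.2f", orderId, amount); // thread-local work
        synchronized (lock) {
            entries.add(line);                                                 // shared state only
        }
        LOG.info(line);                                                        // I/O after releasing the lock
    }

    int size() {
        synchronized (lock) {
            return entries.size();
        }
    }
}
```

Both methods leave the shared list in the same state; the difference is only how long other threads are kept waiting.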

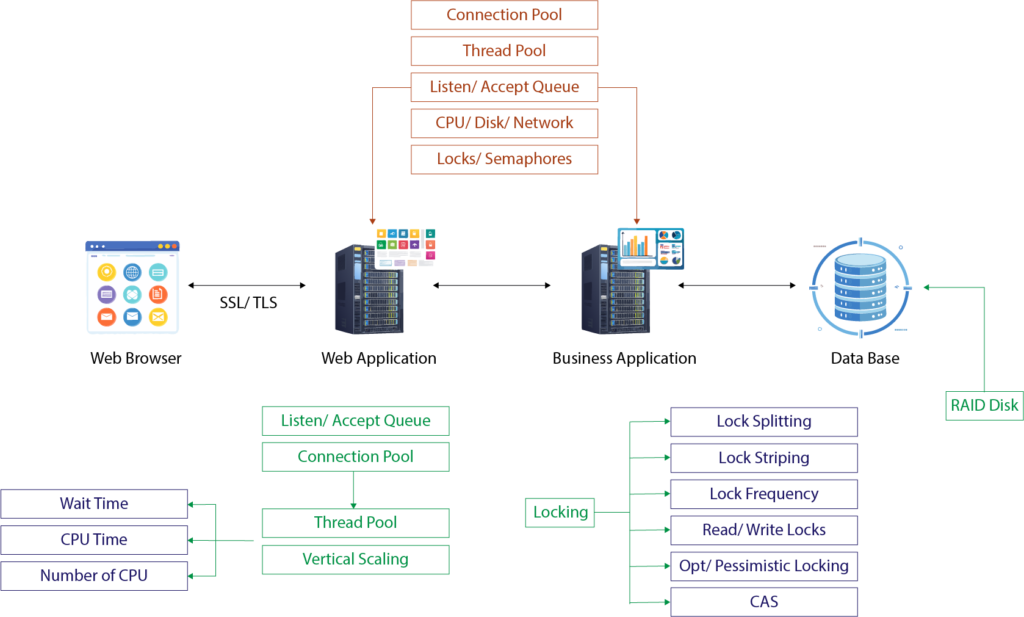

Split Locks for Granularity

Another effective approach is lock splitting, where you break a single coarse-grained lock into multiple finer-grained locks. This reduces contention by allowing threads to work on different parts of a resource concurrently.

Example of Lock Splitting:

Let’s say you have a global lock protecting a shared data structure. Instead of locking the entire structure, divide it into smaller, independent sections, each with its own lock.
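A minimal Java sketch of lock splitting, assuming a hypothetical `ServerStatus` class that tracks two independent sets (logged-in users and running queries). Rather than guarding both with one lock, each set gets its own, so a thread adding a user never waits for a thread adding a query:

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical status tracker. The two sets are independent, so instead of one
// coarse lock (e.g. synchronized methods on `this`), each set has its own lock.
class ServerStatus {
    private final Object usersLock = new Object();
    private final Object queriesLock = new Object();
    private final Set<String> users = new HashSet<>();
    private final Set<String> queries = new HashSet<>();

    void addUser(String u)     { synchronized (usersLock)   { users.add(u); } }
    void removeUser(String u)  { synchronized (usersLock)   { users.remove(u); } }
    void addQuery(String q)    { synchronized (queriesLock) { queries.add(q); } }
    void removeQuery(String q) { synchronized (queriesLock) { queries.remove(q); } }

    int userCount()  { synchronized (usersLock)   { return users.size(); } }
    int queryCount() { synchronized (queriesLock) { return queries.size(); } }
}
```

This is only safe because no invariant spans both sets. If an operation had to update users and queries together, it would need both locks, always acquired in the same order to avoid deadlock.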

Real-World Scenario:

In a caching layer I helped optimize, we initially used a single lock to manage all cached keys. This caused severe contention during high traffic. By partitioning the cache into smaller segments, each with its own lock, we drastically reduced contention and improved performance.

Use Lock Striping

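Lock striping is an advanced variation of lock splitting that extends it to a variable-sized set of independent objects, and it is commonly seen in concurrent data structures like ConcurrentHashMap. Instead of locking an entire data structure, the structure is divided into segments (stripes), each guarded by a separate lock, and each element is mapped to one stripe, typically by its hash.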

How It Works:

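Consider ConcurrentHashMap in Java. Up to Java 7, the map was internally divided into 16 segments by default (configurable through the concurrencyLevel constructor argument), and each segment had its own lock. Modifying a key engaged only the corresponding segment’s lock, leaving other segments free for concurrent access. Since Java 8, the implementation goes further: it uses CAS to insert into empty hash bins and locks only the first node of a bin for other updates, so the effective "stripe" is a single bin, while reads generally don’t lock at all.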
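The general technique can be sketched in Java with a fixed array of `ReentrantLock`s, one per stripe. This hypothetical `StripedMap` is a simplified illustration of lock striping, not how `ConcurrentHashMap` is actually implemented; it assumes non-null keys and picks a key’s stripe from its hash code:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical striped map: N locks guard N independent sub-maps.
// Threads touching keys in different stripes never contend with each other.
class StripedMap<K, V> {
    private final ReentrantLock[] locks;
    private final Map<K, V>[] stripes;

    @SuppressWarnings("unchecked")
    StripedMap(int stripeCount) {
        if (stripeCount <= 0) throw new IllegalArgumentException("stripeCount must be > 0");
        locks = new ReentrantLock[stripeCount];
        stripes = (Map<K, V>[]) new Map[stripeCount];
        for (int i = 0; i < stripeCount; i++) {
            locks[i] = new ReentrantLock();
            stripes[i] = new HashMap<>();
        }
    }

    // floorMod keeps the index non-negative even for negative hash codes.
    private int stripeFor(Object key) {
        return Math.floorMod(key.hashCode(), locks.length);
    }

    V put(K key, V value) {
        int i = stripeFor(key);
        locks[i].lock();              // only this key's stripe is locked
        try {
            return stripes[i].put(key, value);
        } finally {
            locks[i].unlock();
        }
    }

    V get(K key) {
        int i = stripeFor(key);
        locks[i].lock();
        try {
            return stripes[i].get(key);
        } finally {
            locks[i].unlock();
        }
    }

    // Whole-map operations must take every stripe lock, in a fixed order to
    // avoid deadlock. This is the main cost of striping.
    int size() {
        for (ReentrantLock l : locks) l.lock();
        try {
            int n = 0;
            for (Map<K, V> m : stripes) n += m.size();
            return n;
        } finally {
            for (ReentrantLock l : locks) l.unlock();
        }
    }
}
```

Per-key operations contend only when two keys land on the same stripe. Operations like `size()` have to lock everything, so striping pays off when such whole-structure operations are rare.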

Benefits of Lock Striping:

- Reduces contention by localizing locks to smaller sections.

- Scales better with increased concurrency.

Example:

In one project, we replaced a global lock on a hash table with a striped lock implementation. This allowed multiple threads to update different segments simultaneously. The performance improvement wasn’t linear (16 segments did not yield a 16x speedup), but it was substantial enough to handle the increased load without degrading user experience.

Optimize with Read/Write Locks

In scenarios with frequent reads and occasional writes, traditional exclusive locks can cause unnecessary contention. Enter read-write locks, which allow multiple readers to access a resource simultaneously while ensuring writers have exclusive access when needed.

How Read-Write Locks Work:

- Readers: Multiple threads can acquire the read lock concurrently, as long as no writer is holding the write lock.

- Writers: A writer thread must wait for all readers (and any other writer) to release their locks before acquiring the write lock. While it holds the write lock, new readers must wait for it to finish.
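A minimal sketch of these rules using the JDK’s `java.util.concurrent.locks.ReentrantReadWriteLock`; the `ConfigStore` class is hypothetical, loosely modelled on a read-heavy configuration service:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical read-mostly configuration store.
class ConfigStore {
    private final ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
    private final Map<String, String> values = new HashMap<>();

    // Any number of threads may hold the read lock at the same time.
    String get(String key) {
        rw.readLock().lock();
        try {
            return values.get(key);
        } finally {
            rw.readLock().unlock();
        }
    }

    // The write lock is exclusive: it waits for active readers to drain,
    // and readers and other writers wait while it is held.
    void put(String key, String value) {
        rw.writeLock().lock();
        try {
            values.put(key, value);
        } finally {
            rw.writeLock().unlock();
        }
    }
}
```

`ReentrantReadWriteLock` lets a writer downgrade to a read lock, but a thread holding the read lock cannot upgrade to the write lock; calling `writeLock().lock()` in that situation deadlocks. For very short read sections, the extra bookkeeping can outweigh the benefit, so it is worth measuring before switching.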

When to Use Read-Write Locks:

- Data structures with a high read-to-write ratio.

- Scenarios where minimizing read contention is crucial.

Example:

I once worked on a configuration management service where 90% of the operations were reads. Switching to a read-write lock allowed simultaneous reads, which significantly boosted performance during peak load.

Consider Optimistic Locking

While traditional locks are pessimistic (assuming contention will happen), optimistic locking takes the opposite approach: it assumes conflicts are rare and deals with them only when they actually occur.

How Optimistic Locking Works:

Optimistic locking doesn’t lock the resource up front. Instead:

- The thread reads the resource.

- It performs its operation.

- Before committing its changes, it checks whether another thread has modified the resource since it was read. This check and the commit must happen as one atomic step. If nothing changed, it applies the changes; otherwise, it retries.
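The three steps above can be sketched in Java with an explicit version number. In this hypothetical `VersionedAccount`, the short `synchronized` method `tryCommit` stands in for the atomic check-and-write that a database would perform; the computation between reading and committing runs without holding any lock:

```java
// Hypothetical versioned record, modelling the "version number" pattern.
class VersionedAccount {
    // Immutable snapshot of the record as read by one thread.
    static final class Snapshot {
        final long balance;
        final long version;
        Snapshot(long balance, long version) { this.balance = balance; this.version = version; }
    }

    private long balance;
    private long version;

    VersionedAccount(long initialBalance) { this.balance = initialBalance; }

    synchronized Snapshot read() {
        return new Snapshot(balance, version);
    }

    // Succeeds only if nobody has committed since `expectedVersion` was read.
    // The check and the write happen atomically under this object's monitor.
    synchronized boolean tryCommit(long expectedVersion, long newBalance) {
        if (version != expectedVersion) {
            return false;                        // conflict: someone else committed first
        }
        balance = newBalance;
        version++;
        return true;
    }

    // Optimistic update loop: read, compute, try to commit, retry on conflict.
    void deposit(long amount) {
        while (true) {
            Snapshot s = read();
            long updated = s.balance + amount;   // computed without holding any lock
            if (tryCommit(s.version, updated)) {
                return;
            }
        }
    }

    synchronized long balance() { return balance; }
    synchronized long version() { return version; }
}
```

A thread whose snapshot has gone stale simply gets `false` from `tryCommit` and starts over with fresh data, instead of blocking other threads while it works.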

Real-Life Use Case:

Optimistic locking is common in databases. For example, an UPDATE statement can include a WHERE clause that matches the row only if its version column still holds the value read earlier, and the same statement increments the version. If another transaction modified the row in the meantime, the UPDATE affects zero rows; the application detects this and retries.

Explore Compare-and-Swap (CAS) Mechanisms

CAS is a low-level, non-blocking synchronization primitive. It’s often used in lock-free algorithms and data structures.

How CAS Works:

CAS operates on three values:

- A memory location (the variable to update).

- The value the thread expects that location to currently hold.

- The new value to set if the current value matches the expected value.

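CAS compares the location’s current value with the expected value and, only if they match, writes the new value, all as one atomic operation (usually backed by a dedicated CPU instruction). If they don’t match, the CAS fails without writing anything, and the caller typically re-reads the value and retries in a loop. Because no thread ever blocks waiting for a lock, this can significantly improve performance in highly concurrent systems, although under very heavy contention the retries themselves become a cost.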
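Here is that retry loop written out by hand with `AtomicInteger.compareAndSet`, as a minimal Java sketch. The `CasMax.updateMax` helper is hypothetical; it atomically raises a shared value to a candidate if the candidate is larger:

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper built on a CAS retry loop.
final class CasMax {
    private CasMax() {}

    static int updateMax(AtomicInteger target, int candidate) {
        while (true) {
            int current = target.get();                   // read the current value
            if (candidate <= current) {
                return current;                           // nothing to change
            }
            // Writes only if the value is still `current`; otherwise returns false.
            if (target.compareAndSet(current, candidate)) {
                return candidate;                         // our write won
            }
            // Another thread changed the value between get() and compareAndSet(): retry.
        }
    }
}
```

Since Java 8, the same update can also be written as `target.accumulateAndGet(candidate, Math::max)`, which runs an equivalent retry loop for you.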

Example of CAS:

Java’s atomic classes in java.util.concurrent.atomic (like AtomicInteger) are built on CAS and also expose it directly through methods such as compareAndSet(). These classes allow thread-safe operations without traditional locks.
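A short Java sketch of what this buys you: several threads increment one `AtomicInteger` with no explicit lock, and no updates are lost. The `AtomicCounterDemo` class and the thread and iteration counts are arbitrary choices for illustration:

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo: many threads bump a shared counter without a lock.
final class AtomicCounterDemo {
    private AtomicCounterDemo() {}

    static int countConcurrently(int threads, int incrementsPerThread) {
        AtomicInteger counter = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.execute(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    counter.incrementAndGet();   // atomic read-modify-write, no lock
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("workers did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter.get();
    }
}
```

With a plain `int` field and `count++`, the same run would typically lose updates, because the read, increment, and write are separate steps. For counters under very heavy contention, `java.util.concurrent.atomic.LongAdder` (Java 8+) spreads updates across internal cells and usually scales better than a single `AtomicInteger`.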

Monitor and Iterate

No optimization strategy is complete without monitoring. Track metrics like:

- Lock wait times.

- Contention rates.

- Throughput and latency.

Profilers and observability tools (on the JVM, for example, Java Flight Recorder and thread dumps) can help you identify hotspots and validate the impact of your changes.
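On the JVM, per-thread lock wait figures are also available programmatically from the standard `java.lang.management.ThreadMXBean`. The following is a minimal sketch; the `ContentionProbe` helper is hypothetical, and the numbers it reports cover only threads blocked on `synchronized` monitors:

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;

// Hypothetical helper: reports how often and how long a thread has been
// BLOCKED waiting to enter a synchronized monitor.
final class ContentionProbe {
    private static final ThreadMXBean MX = ManagementFactory.getThreadMXBean();

    private ContentionProbe() {}

    // Blocked time is tracked only after contention monitoring is enabled,
    // and not every JVM supports it.
    static boolean enable() {
        if (!MX.isThreadContentionMonitoringSupported()) {
            return false;
        }
        MX.setThreadContentionMonitoringEnabled(true);
        return true;
    }

    static String report(Thread thread) {
        ThreadInfo info = MX.getThreadInfo(thread.getId());
        if (info == null) {
            return thread.getName() + ": not alive";
        }
        // getBlockedTime() is in milliseconds and is -1 when monitoring is disabled.
        return String.format("%s: blocked %d times, %d ms total",
                thread.getName(), info.getBlockedCount(), info.getBlockedTime());
    }
}
```

Threads waiting on a `java.util.concurrent` lock such as `ReentrantLock` are parked in the WAITING state rather than BLOCKED, so `getBlockedCount()` and `getBlockedTime()` will not capture them.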

Summary

Minimizing lock contention requires a mix of thoughtful design, informed decisions, and regular tuning. The key is to identify where contention is occurring, apply the appropriate techniques, and measure the results.

In my experience, a well-optimized locking strategy can transform a sluggish system into a high-performance one. Stay tuned for the next article, where we’ll explore pessimistic vs. optimistic locking and dive deeper into compare-and-swap mechanisms.
