When discussing concurrency-related latency, two primary factors come into play: queuing delays and coherence delays. We’ve already delved into queuing and the measures to address its impact. Now, let’s shift focus to coherence-related delays—a nuanced but critical aspect of system performance.

What Is Coherence?

Coherence relates to shared data in multi-threaded systems. Specifically, it deals with ensuring that all threads working with shared data have a consistent and up-to-date view of that data.

Without proper coherence mechanisms, threads can work on stale or incorrect data, leading to unpredictable behaviour or outright system errors.

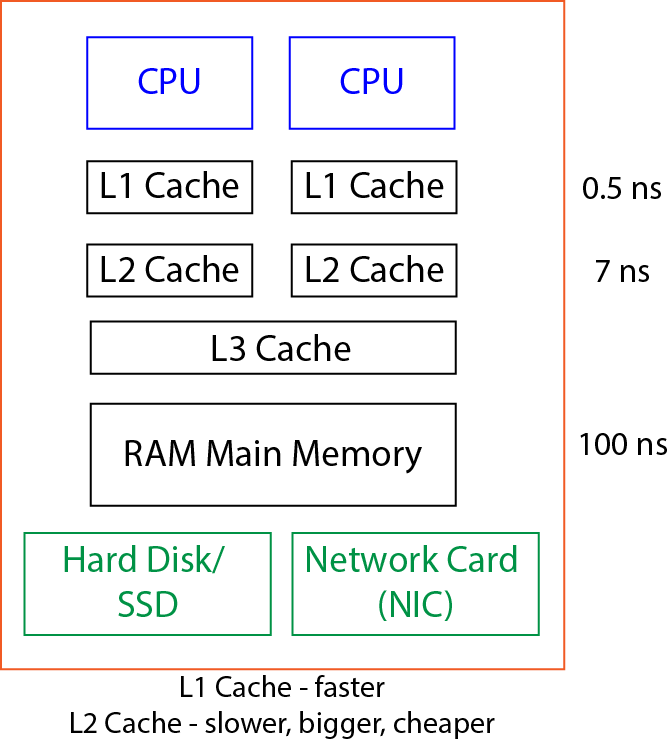

Imagine two threads, Thread A running on CPU1 and Thread B running on CPU2, both accessing the same shared variable. Each CPU has its own caches (L1, L2) and registers to speed up computation. When these threads interact with shared data, the following can happen:

- Each thread reads the shared variable into its local cache.

- Thread A modifies the variable in CPU1’s cache.

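- Thread B, unaware of this modification, keeps working with the old value, for example a copy the compiler kept in a register or a value it read before CPU1's write became visible.

This lack of synchronization results in coherence issues, where threads disagree about the state of shared data. Hardware cache-coherence protocols do eventually propagate CPU1's write, but without synchronization neither the compiler nor the CPU promises when Thread B will observe it, or in what order relative to other writes.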

Real-World Scenario

Consider a scenario where one thread signals another using a shared flag.

If Thread A sets the flag to true but this update doesn’t propagate immediately to Thread B, Thread B might remain unaware of the change, leading to delays or failures.
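Here is a minimal Java sketch of the flag signal done safely, using the `volatile` keyword discussed later in this post. `FlagHandoff`, `publish`, and `awaitPayload` are hypothetical names, and `Thread.onSpinWait` assumes Java 9 or later. If `ready` were a plain `boolean`, the JIT would be allowed to hoist the read out of the loop, and Thread B could spin forever without noticing the update.

```java
// Sketch: Thread A publishes a value and raises a volatile flag; Thread B waits for the flag.
class FlagHandoff {
    private int payload;                    // plain field, published safely via the flag
    private volatile boolean ready = false; // volatile: Thread B is guaranteed to see the update

    // Thread A: write the data first, then raise the flag.
    void publish(int value) {
        payload = value;
        ready = true; // volatile write: payload (written above) is visible to any thread that reads ready == true
    }

    // Thread B: busy-wait until the flag is seen, then read the data.
    int awaitPayload() {
        while (!ready) {
            Thread.onSpinWait(); // spin-loop hint to the runtime (Java 9+)
        }
        return payload;
    }

    // Starts a reader and a writer thread and returns what the reader saw.
    static int runDemo(int value) {
        FlagHandoff handoff = new FlagHandoff();
        int[] seen = new int[1];
        Thread threadB = new Thread(() -> seen[0] = handoff.awaitPayload());
        Thread threadA = new Thread(() -> handoff.publish(value));
        threadB.start();
        threadA.start();
        try {
            threadA.join();
            threadB.join(); // join also makes threadB's write to seen[0] visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while joining", e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(runDemo(42)); // prints 42
    }
}
```

Writing `payload` before `ready` matters: the reader relies on the volatile flag to carry the earlier plain write along with it.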

Addressing Coherence-Related Delays

To handle coherence issues, programming languages provide constructs like synchronized blocks, locks, or volatile variables. Let’s break these down.

1. Synchronized Constructs (Locking Mechanisms)

In languages like Java, using a synchronized block ensures both mutual exclusion (only one thread accesses the critical section) and visibility (changes are propagated across threads). When data is guarded with synchronization:

- When a thread leaves the block (releases the lock), its writes to the shared data are made visible to other threads.

- Any thread that later enters a block synchronized on the same object sees those writes instead of a stale copy.
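Both guarantees show up in a small Java sketch. `Accounts`, `transfer`, and `snapshot` are hypothetical names chosen for illustration, and `synchronized` methods are used (equivalent to `synchronized (this)`). Because both balances change under the same lock, no thread ever observes money that has left one balance but not yet reached the other.

```java
// Sketch: synchronized methods guard an invariant that spans two fields.
class Accounts {
    private int checking = 100_000;
    private int savings = 0;

    // Mutual exclusion: no other thread can interleave with a half-finished transfer.
    // Visibility: releasing the monitor publishes both writes to the next thread that locks it.
    synchronized void transfer(int amount) {
        checking -= amount;
        savings += amount;
    }

    // Reads lock the same monitor, so they see a consistent, up-to-date pair.
    synchronized int[] snapshot() {
        return new int[] { checking, savings };
    }

    // Runs 'threads' workers, each making 'transfersPerThread' transfers of 1, then returns the balances.
    static int[] runDemo(int threads, int transfersPerThread) {
        Accounts accounts = new Accounts();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < transfersPerThread; i++) {
                    accounts.transfer(1);
                }
            });
            workers[t].start();
        }
        joinAll(workers);
        return accounts.snapshot();
    }

    private static void joinAll(Thread[] threads) {
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while joining", e);
            }
        }
    }

    public static void main(String[] args) {
        int[] balances = runDemo(4, 10_000);
        System.out.println(balances[0] + " " + balances[1]); // prints "60000 40000"
    }
}
```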

Pros:

- Guarantees correctness by synchronizing updates and reads.

- Prevents stale reads or writes.

Cons:

- Performance Cost: The memory barriers and cross-core cache-line transfers that enforce visibility are significantly slower than plain accesses to a CPU's own cache.

- Latency increases as thread contention rises.

Here’s a Java-like example for better clarity:

```java
// Writers and readers must synchronize on the same object.
synchronized (sharedObject) {
    sharedObject.value = 42;
}
```

2. Volatile Variables

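In scenarios where you need visibility but not mutual exclusion, you can use volatile variables. Declaring a variable as volatile ensures that:

- A write to the variable is visible to every thread that subsequently reads it.

- Threads don't keep using a stale copy; the compiler and JIT cannot cache the value in a register across reads.

- Writes a thread made before writing the volatile variable are also visible to a thread that reads the new value.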

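However, unlike synchronization, volatile doesn't make compound operations atomic: `sharedFlag++` is still a separate read, add, and write. It suits simple signals where one thread writes and others only read, for example:

```java
volatile int sharedFlag = 0;

// Thread A
sharedFlag = 1;

// Thread B
if (sharedFlag == 1) {
    // React to the update
}
```

Trade-Offs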

- Reduced Overhead: Volatile avoids the locking penalties of synchronized constructs.

- Visibility Guarantees Only: It doesn't prevent race conditions when multiple threads read, modify, and write the same variable (for example, two threads incrementing a shared counter).
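To see the "visibility only" limitation, here is a hypothetical Java sketch (class and method names are illustrative) that increments a `volatile int` and an `AtomicInteger` from several threads. The atomic counter always ends at the exact total; the volatile one can lose updates, and because the loss depends on thread timing, the sketch does not promise any particular lower value.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Sketch: volatile gives visibility, but count++ is still three steps (read, add, write).
class VolatileVsAtomic {
    private volatile int volatileCount = 0;                        // visible, but ++ is not atomic
    private final AtomicInteger atomicCount = new AtomicInteger(); // incrementAndGet is one atomic step

    // Each worker bumps both counters; returns {volatileCount, atomicCount}.
    static int[] runDemo(int threads, int incrementsPerThread) {
        VolatileVsAtomic c = new VolatileVsAtomic();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    c.volatileCount++;               // racy: two threads can read the same value and both write value + 1
                    c.atomicCount.incrementAndGet(); // never loses an update
                }
            });
            workers[t].start();
        }
        joinAll(workers);
        return new int[] { c.volatileCount, c.atomicCount.get() };
    }

    private static void joinAll(Thread[] threads) {
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while joining", e);
            }
        }
    }

    public static void main(String[] args) {
        int[] counts = runDemo(4, 10_000);
        // The atomic counter is always 40000; the volatile one can come out lower.
        System.out.println("volatile: " + counts[0] + ", atomic: " + counts[1]);
    }
}
```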

Coherence Costs

The cost of coherence arises from the time required to maintain consistent views of shared data across threads. This involves:

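- Invalidating caches: When one CPU modifies shared data, the coherence protocol invalidates the copies held in other CPUs' caches, so their next access must fetch the line again from the modifying core or from memory.

- Memory latency: Accessing main memory (~100 ns) is significantly slower than accessing L1/L2 caches (~0.5–7 ns).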

Coherence costs grow with:

- Increased sharing: More threads accessing the same data increase the frequency of coherence operations.

- Frequent updates: Variables modified often by multiple threads exacerbate delays.

Optimizing for Coherence

Coherence-related delays can’t be eliminated but can be mitigated. Here’s how:

- Minimize shared data access: Reduce the number of threads accessing the same variables.

- Reduce update frequency: Minimize how often shared variables are modified.

- Favor local computation: Where possible, perform calculations in thread-local variables and synchronize only when necessary.

- Use atomic operations: For simple updates, consider atomic classes such as `AtomicInteger` in Java.
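The "favor local computation" and "use atomic operations" advice combine naturally. The Java sketch below, built around a hypothetical `LocalThenPublish` class, sums a range in parallel: each thread accumulates into its own local variable and touches the shared `AtomicLong` only once at the end, so shared writes drop from one per element to one per thread.

```java
import java.util.concurrent.atomic.AtomicLong;

// Sketch: accumulate in a thread-local variable, publish to shared state once per thread.
class LocalThenPublish {
    // Sums 1..n with 'threads' workers; worker t handles t+1, t+1+threads, t+1+2*threads, ...
    static long parallelSum(int n, int threads) {
        AtomicLong total = new AtomicLong(); // the only shared, written-to state
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int first = t + 1;
            workers[t] = new Thread(() -> {
                long local = 0; // private to this thread: updating it never invalidates another core's cache
                for (int i = first; i <= n; i += threads) {
                    local += i;
                }
                total.addAndGet(local); // one shared update per thread instead of one per element
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while joining", e);
            }
        }
        return total.get();
    }

    public static void main(String[] args) {
        System.out.println(parallelSum(1_000_000, 8)); // prints 500000500000
    }
}
```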

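C# Code Example: Locking a Shared Resource

Here's an example that guards a shared inventory count with a lock (pessimistic locking), keeping the critical section to a brief check-and-update so that contention, and the coherence traffic it causes, stays low: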

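```csharp
using System;
using System.Threading.Tasks;

public class ProductInventory
{
    private readonly object _lockObject = new object();

    // Written only inside the lock; read outside it only after all workers finish.
    public int Quantity { get; private set; }

    public ProductInventory(int initialQuantity)
    {
        Quantity = initialQuantity;
    }

    // The check and the decrement happen under one lock, so they act as a single step.
    // 'remaining' is captured inside the lock, so it reflects this call's outcome.
    public bool TryReserve(int amount, out int remaining)
    {
        lock (_lockObject)
        {
            if (Quantity >= amount)
            {
                Quantity -= amount;
                remaining = Quantity;
                return true;
            }

            remaining = Quantity;
            return false;
        }
    }
}

public class Program
{
    public static void Main()
    {
        var inventory = new ProductInventory(10);

        // Simulate 15 concurrent reservation requests against 10 items.
        Parallel.For(0, 15, i =>
        {
            if (inventory.TryReserve(1, out int remaining))
            {
                Console.WriteLine($"Request {i} reserved 1 item. Remaining: {remaining}");
            }
            else
            {
                Console.WriteLine($"Request {i} failed to reserve. Remaining: {remaining}");
            }
        });

        // Parallel.For has returned, so every worker's writes are visible here.
        Console.WriteLine($"Final quantity: {inventory.Quantity}");
    }
}
```

In this example:

- `lock` ensures mutual exclusion while the shared quantity is checked and updated, so the check and the decrement act as a single step.

- Requests attempting to reserve more than the available quantity fail gracefully instead of driving the quantity negative.

- The remaining quantity is captured inside the lock, so each message reports the value right after that request rather than a racy read of `Quantity`.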
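The C# example takes the lock before every check, which is pessimistic locking. An optimistic alternative reads the current value without locking, computes the new one, and commits with a compare-and-set, retrying if another thread changed the value in between. The sketch below shows that pattern in Java with `AtomicInteger.compareAndSet`; `OptimisticInventory` is a hypothetical class mirroring `ProductInventory` (in C#, the same idea is usually written with `Interlocked.CompareExchange`). Under heavy contention the retries can cost more than a lock, so it is a trade-off rather than a free win.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Sketch: optimistic reservation via a compare-and-set retry loop (no lock held).
class OptimisticInventory {
    private final AtomicInteger quantity;

    OptimisticInventory(int initialQuantity) {
        quantity = new AtomicInteger(initialQuantity);
    }

    boolean tryReserve(int amount) {
        while (true) {
            int current = quantity.get(); // optimistic read
            if (current < amount) {
                return false;             // not enough stock: fail without writing
            }
            // Commits only if quantity is still 'current'; a concurrent change forces a retry.
            if (quantity.compareAndSet(current, current - amount)) {
                return true;
            }
        }
    }

    int remaining() {
        return quantity.get();
    }

    // Runs 'attempts' concurrent reservations of 1 item; returns {successes, remaining}.
    static int[] runDemo(int initialQuantity, int attempts) {
        OptimisticInventory inventory = new OptimisticInventory(initialQuantity);
        AtomicInteger successes = new AtomicInteger();
        Thread[] workers = new Thread[attempts];
        for (int i = 0; i < attempts; i++) {
            workers[i] = new Thread(() -> {
                if (inventory.tryReserve(1)) {
                    successes.incrementAndGet();
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while joining", e);
            }
        }
        return new int[] { successes.get(), inventory.remaining() };
    }

    public static void main(String[] args) {
        int[] result = runDemo(10, 15);
        System.out.println(result[0] + " reserved, " + result[1] + " left"); // prints "10 reserved, 0 left"
    }
}
```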

Final Thoughts

Coherence delays are an unavoidable part of multi-threaded systems. While constructs like synchronized or volatile help mitigate issues, they come with performance trade-offs. Balancing these trade-offs requires careful consideration of your system’s concurrency patterns and performance needs.

By understanding and addressing coherence costs, you can design architectures that strike the right balance between correctness and efficiency.
