Caching is one of the most impactful tools for optimizing system performance. It lets systems respond faster by cutting the time needed to access frequently requested data, which lowers latency and improves the overall user experience. Done well, caching can significantly reduce the load on backend systems, improve scalability, and make your applications more resilient. However, implementing caching effectively requires an in-depth understanding of your system’s architecture. That includes the types of data you handle, where to store them, and how to manage cache invalidation so you don’t serve stale content.

In this article, we’ll dive into caching strategies from a system architecture perspective, with a focus on practical techniques for improving your system’s performance. Drawing from my own experience, I’ll walk you through caching best practices, provide code examples, and outline how caching at each layer can strengthen your architecture. Whether you’re building an enterprise system or a smaller-scale web application, understanding caching is crucial for optimizing performance and scalability.

Understanding the Role of Caching in System Performance

Caching is essentially about storing frequently accessed data closer to where it’s needed.

Keeping that data in memory reduces the need for repeated queries to the backend, cutting the time and resources required to fetch it. Efficient caching is one of the most effective ways to enhance system performance and reduce pressure on databases, APIs, and other backend resources. However, caching is not a one-size-fits-all solution. Knowing which data to cache is key to maintaining both performance and correctness.

Generally, the data that benefits from caching falls into two categories:

- Static Data: This type of data rarely changes and is requested often. It includes images, CSS files, JavaScript files, or even pre-rendered HTML content. Because these files don’t change frequently, caching them significantly reduces the load on the backend.

- Dynamic Data: This is data that changes frequently, such as user session data, real-time notifications, or personalized query results. Dynamic data is harder to cache because of its volatility. Caching it can still improve performance when done carefully, balancing freshness against speed.

The art of caching lies in striking the right balance. Caching static content can deliver significant performance gains without much complexity, but caching dynamic content requires more care. Over-caching dynamic data risks serving stale content to users. Under-caching static data leaves avoidable load on the backend. Caching indiscriminately also fills memory with data that is rarely read.

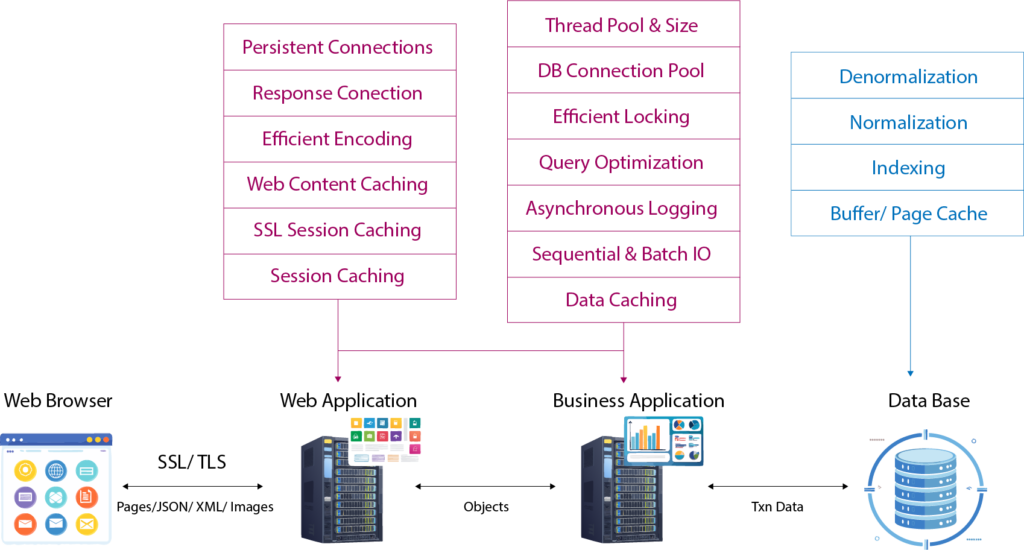

Caching Layers in System Architecture

To achieve optimal system performance, caching should be implemented across multiple layers of the architecture. Here are the main areas where caching can make a significant difference:

1. Backend (Database Caching)

A common starting point is the backend, in front of the database layer. By caching the results of database queries in memory, we avoid hitting the database every time the same data is requested. This reduces response times and decreases the load on your database.

Use Case: Shared data that is not tied to a specific user, such as product catalogs, configuration settings, or reference data, is ideal for database-level caching.

Example Code:

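```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

public class ProductService
{
    private readonly IMemoryCache _cache;
    private readonly AppDbContext _context; // AppDbContext (hypothetical name): your EF Core DbContext with a DbSet<Product> Products

    public ProductService(IMemoryCache cache, AppDbContext context)
    {
        _cache = cache;
        _context = context;
    }

    public Product? GetProduct(int id)
    {
        // Return the cached product if present; otherwise run the factory,
        // store its result under this key, and return it.
        return _cache.GetOrCreate($"Product_{id}", entry =>
        {
            entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(5); // entry expires 5 minutes after creation
            return _context.Products.Find(id); // database hit only on a cache miss
        });
    }
}
```

By caching the result of the GetProduct method, repeated requests for the same product within the five-minute window are served from memory instead of querying the database. Note that GetOrCreate also caches a null result, so a lookup for a product ID that doesn't exist is remembered for five minutes as well.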

2. Service Layer Caching

Caching at the service layer is also effective, particularly when you want to avoid redundant calls to the database or external APIs. For example, in systems with multiple users, caching user-specific data such as preferences or session details can save significant computation time.

Use Case: User-specific data that changes infrequently, like user preferences or dashboard data, can be cached for a limited period, such as the length of a typical session.

Example Code:

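```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Distributed;

public class UserService
{
    private readonly IDistributedCache _cache;

    public UserService(IDistributedCache cache)
    {
        _cache = cache;
    }

    public async Task SetUserPreferencesAsync(string userId, string preferences)
    {
        await _cache.SetStringAsync($"UserPrefs_{userId}", preferences, new DistributedCacheEntryOptions
        {
            AbsoluteExpirationRelativeToNow = TimeSpan.FromHours(1) // entry expires one hour after it is written
        });
    }

    // Returns null on a cache miss (never stored, or already expired).
    public async Task<string?> GetUserPreferencesAsync(string userId)
    {
        return await _cache.GetStringAsync($"UserPrefs_{userId}");
    }
}
```

Here, user preferences are stored in a distributed cache (any IDistributedCache implementation, such as Redis), so every application instance can read them without a backend call. On a miss, GetUserPreferencesAsync returns null. The caller must then load the preferences from the backend and store them again.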
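The GetUserPreferencesAsync method above only reads from the cache, so a miss simply returns null. A common way to close that gap is the cache-aside pattern:

1. Check the cache.
2. On a miss, fall back to the backend.
3. Populate the cache with the result.

The sketch below is a minimal illustration under stated assumptions. CachedUserPreferences and the loadFromStore delegate are hypothetical names standing in for your service and backend lookup. An in-process ConcurrentDictionary with a configurable TTL replaces IDistributedCache so the example runs without extra packages.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical cache-aside wrapper. The ConcurrentDictionary is a stand-in for
// IDistributedCache; loadFromStore is a hypothetical backend lookup (e.g. a DB query).
public class CachedUserPreferences
{
    private readonly ConcurrentDictionary<string, (string Value, DateTimeOffset ExpiresAt)> _cache = new();
    private readonly Func<string, Task<string>> _loadFromStore;
    private readonly TimeSpan _ttl;

    public CachedUserPreferences(Func<string, Task<string>> loadFromStore, TimeSpan ttl)
    {
        _loadFromStore = loadFromStore;
        _ttl = ttl;
    }

    public async Task<string> GetUserPreferencesAsync(string userId)
    {
        var key = $"UserPrefs_{userId}";

        // 1. Try the cache first and return the value if it has not expired.
        if (_cache.TryGetValue(key, out var entry) && entry.ExpiresAt > DateTimeOffset.UtcNow)
            return entry.Value;

        // 2. On a miss (or an expired entry), fall back to the backend...
        var preferences = await _loadFromStore(userId);

        // 3. ...and populate the cache so the next request for this user is a hit.
        _cache[key] = (preferences, DateTimeOffset.UtcNow.Add(_ttl));
        return preferences;
    }
}
```

With a real IDistributedCache, the same three steps map onto GetStringAsync, the backend call, and SetStringAsync.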

3. Web Application (Session Caching)

Caching at the web application layer is often used for user-specific, dynamic data. It matters most for data reused throughout a session, such as authentication tokens or other session-specific state behind a personalized experience.

Use Case: Caching session data like authentication tokens, personalized recommendations, or shopping cart contents for a faster user experience.
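Session data like this is usually cached with sliding expiration: each access pushes the expiry forward. Active sessions stay cached while abandoned ones age out. The sketch below is a hypothetical in-process SessionCache<T>. The idle timeout and the injectable clock are assumptions chosen to make the behavior easy to test. In ASP.NET Core you would more typically use the built-in session middleware or the SlidingExpiration option on the cache entry options.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical session cache with sliding expiration: every successful read
// renews the entry, so only sessions idle longer than the timeout are evicted.
public class SessionCache<T>
{
    private readonly Dictionary<string, (T Value, DateTimeOffset LastAccess)> _entries = new();
    private readonly TimeSpan _idleTimeout;
    private readonly Func<DateTimeOffset> _now; // injectable clock (assumption, for testing)
    private readonly object _lock = new();

    public SessionCache(TimeSpan idleTimeout, Func<DateTimeOffset>? now = null)
    {
        _idleTimeout = idleTimeout;
        _now = now ?? (() => DateTimeOffset.UtcNow);
    }

    public void Set(string sessionId, T value)
    {
        lock (_lock) _entries[sessionId] = (value, _now());
    }

    public bool TryGet(string sessionId, out T? value)
    {
        lock (_lock)
        {
            if (_entries.TryGetValue(sessionId, out var entry))
            {
                if (_now() - entry.LastAccess < _idleTimeout)
                {
                    // Sliding expiration: a successful read resets the idle timer.
                    _entries[sessionId] = (entry.Value, _now());
                    value = entry.Value;
                    return true;
                }
                _entries.Remove(sessionId); // idle too long: evict the session
            }
            value = default;
            return false;
        }
    }
}
```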

Static Content Caching

Static content, such as images, CSS files, JavaScript files, and other assets, is a prime candidate for caching. Clients request it repeatedly, and it rarely changes. Caching static content at several levels of your system reduces the load on your web servers and minimizes redundant network calls.

Reverse Proxy/Load Balancer: Static files can be served and cached at the reverse proxy, such as NGINX. When NGINX serves them directly with long-lived cache headers, repeated requests for the same files never reach the application server. Browsers and CDNs can also keep their own copies.

Example Configuration (NGINX):

```nginx
location /static/ {
    alias /var/www/static/;            # /static/app.css is served from /var/www/static/app.css
    expires 30d;                       # adds Expires and Cache-Control: max-age=2592000 (30 days)
    add_header Cache-Control "public"; # lets shared caches (CDNs, proxies) store the files
}
```

Content Delivery Networks (CDNs): CDNs cache static resources at edge locations around the world. They give users faster access to files by reducing the physical distance between users and the servers holding the data.

Browser Caching: Leveraging browser-side caching ensures that static resources are stored locally on users’ devices, further reducing server load and improving response times.

Dynamic Content Caching: Optimizing for Freshness and Performance

Caching dynamic content is more complex than caching static content, but it is often necessary to keep systems performant. When caching dynamic data, you need to manage expiration and invalidation so that users don’t get stale or inconsistent information.

- Object Caching: Caching dynamic data in memory (e.g., using Redis) reduces the need for repeated calculations or database queries. Good candidates include computed results and the results of frequently run database queries.

- Cache Invalidation: Cache invalidation ensures that data is not served from the cache after it becomes outdated. There are two primary strategies:

    - Time-based invalidation: Set expiration times for cached items (e.g., using a TTL, or Time To Live).

    - Event-based invalidation: Invalidate cache entries when specific events occur, such as database updates or user actions.
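Event-based invalidation can be sketched with a plain C# event. The component that writes the data announces the change, and the cache evicts the affected entry so the next read reloads it. ProductStore and ProductPriceCache are hypothetical names, and an in-memory Dictionary stands in for the database.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical data store that raises an event after every successful write.
public class ProductStore
{
    private readonly Dictionary<int, decimal> _prices = new(); // stand-in for the database
    public event Action<int>? ProductChanged;

    public decimal GetPrice(int id) => _prices[id];

    public void UpdatePrice(int id, decimal price)
    {
        _prices[id] = price;
        ProductChanged?.Invoke(id); // notify subscribers only after the write
    }
}

// Cache that evicts an entry as soon as the store reports a change,
// instead of waiting for a TTL to run out.
public class ProductPriceCache
{
    private readonly ConcurrentDictionary<int, decimal> _cache = new();
    private readonly ProductStore _store;

    public int Misses { get; private set; } // simple counter for illustration (not thread-safe)

    public ProductPriceCache(ProductStore store)
    {
        _store = store;
        _store.ProductChanged += id => _cache.TryRemove(id, out _); // event-based invalidation
    }

    public decimal GetPrice(int id)
    {
        if (_cache.TryGetValue(id, out var cached)) return cached;

        Misses++;
        var loaded = _store.GetPrice(id); // miss: read through to the store
        _cache[id] = loaded;
        return loaded;
    }
}
```

Unlike a TTL, this evicts the entry right after the write. In a multi-server deployment, the change notification would have to travel over a shared channel, such as a message bus or Redis pub/sub, rather than an in-process event.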

Optimistic Locking for Dynamic Caching

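Optimistic locking is useful when dynamic data may be modified concurrently. Instead of holding locks, each write checks that the data has not changed since it was read. If it has, the write is rejected, so concurrent updates cannot silently overwrite each other. Pair this with invalidating the cached copy after each successful write to keep your cache and database in sync.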

Example Code:

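```csharp
using System;
using System.Linq;
using Microsoft.EntityFrameworkCore;

public class ProductService
{
    private readonly AppDbContext _context; // AppDbContext (hypothetical name): your EF Core DbContext with a DbSet<Product> Products

    public ProductService(AppDbContext context)
    {
        _context = context;
    }

    // Requires Product to have a concurrency token, for example
    // [Timestamp] public byte[] RowVersion { get; set; }
    // Without one, EF Core never detects the conflict and the last write wins.
    public bool UpdateProductQuantity(int productId, int newQuantity)
    {
        var product = _context.Products.SingleOrDefault(p => p.Id == productId);
        if (product == null) return false;

        product.Quantity = newQuantity;

        try
        {
            // The UPDATE includes the original token value in its WHERE clause;
            // if another writer changed the row, no rows match and EF Core throws.
            _context.SaveChanges();
            return true;
        }
        catch (DbUpdateConcurrencyException)
        {
            Console.WriteLine("Concurrency conflict detected. Update failed.");
            return false;
        }
    }
}
```

Suppose another request changes the same product between the read and SaveChanges. EF Core then throws DbUpdateConcurrencyException, and the update is rejected instead of silently overwriting the other change. After a successful update, invalidate any cached copy of the product, such as the Product_{id} entry from the database caching example, so readers don’t keep seeing the old quantity.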
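The EF Core version relies on the database to detect conflicts. The same idea can be shown in memory with an explicit version number, which also makes the cache step visible. The write is a compare-and-swap on the current row, and the cached copy is evicted only after the swap succeeds. VersionedProductStore and ProductRow are hypothetical names. ConcurrentDictionary.TryUpdate provides the atomic compare-and-swap, and C# records compare by value, so a row read at an older version never matches.

```csharp
using System;
using System.Collections.Concurrent;

// A row whose Version plays the role of a rowversion / concurrency token.
public record ProductRow(int Quantity, int Version);

// Hypothetical in-memory "table" with a read cache and optimistic concurrency.
public class VersionedProductStore
{
    private readonly ConcurrentDictionary<int, ProductRow> _rows = new();  // stand-in for the database
    private readonly ConcurrentDictionary<int, ProductRow> _cache = new(); // read cache

    public void Seed(int id, int quantity) => _rows[id] = new ProductRow(quantity, 1);

    // Cache-aside read: serve from the cache, or load the current row on a miss.
    public ProductRow Read(int id) => _cache.GetOrAdd(id, key => _rows[key]);

    public bool TryUpdateQuantity(int id, ProductRow expected, int newQuantity)
    {
        var updated = new ProductRow(newQuantity, expected.Version + 1);

        // Atomic compare-and-swap: replaces the row only if it still equals `expected`.
        if (!_rows.TryUpdate(id, updated, expected))
            return false; // conflict: another writer changed the row; re-read and retry

        // Invalidate only after the write succeeded, so the next Read loads the new row.
        _cache.TryRemove(id, out _);
        return true;
    }
}
```

This sketch still has a small window: a reader that loaded the old row just before the write can put it back into the cache. In practice, a short TTL on cached entries bounds how long such a stale copy can survive.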

Conclusion

Caching is an indispensable tool in the optimization toolbox of any architect or system engineer. When implemented effectively, it can transform system performance. It reduces latency, eases the load on backend resources, and makes the system more responsive. By strategically caching static and dynamic content at various layers, from the backend database to the web application and the client, it’s possible to improve both speed and efficiency. The system also stays reliable and scalable as it grows.

However, caching is not a “set it and forget it” solution. It requires careful planning, continuous monitoring, and a solid understanding of the specific needs of your system. Over-caching can lead to memory bloat and potential performance degradation, while under-caching may result in unnecessary load on backend resources. Furthermore, managing cache invalidation is crucial to ensure that users don’t experience stale or outdated data, which can lead to inconsistencies and a poor user experience.

In practice, optimizing for system performance through caching involves making decisions based on the architecture of your application, the nature of the data, and the specific challenges you face, such as user concurrency, data volatility, and data access patterns. While the benefits of caching are clear, the implementation must be tailored to the unique demands of your system, considering factors such as system reliability, cache expiration strategies, and the use of distributed caching systems for scaling.
